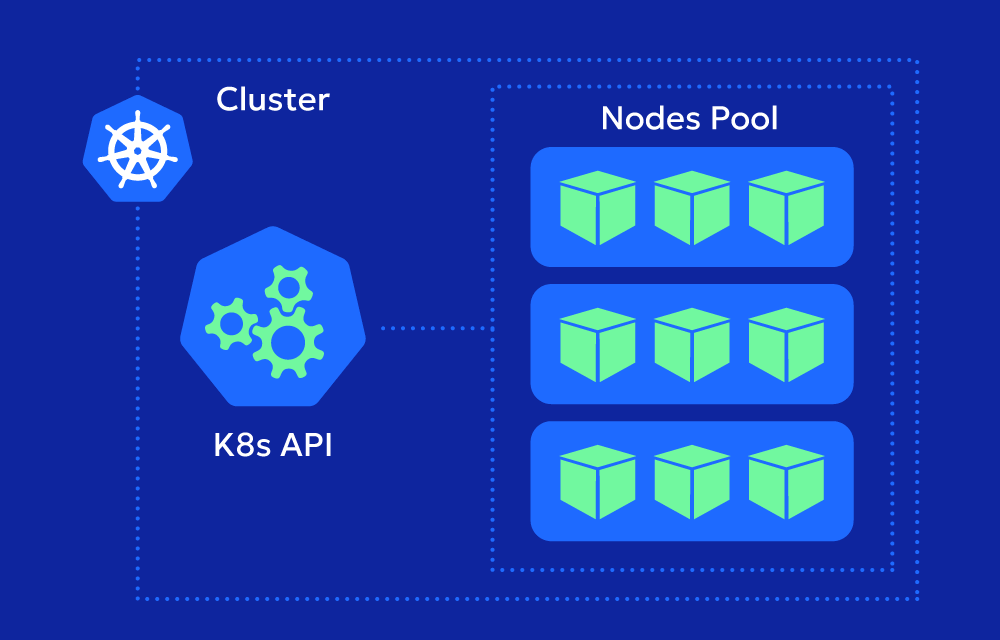

Kubernetes has become the hero of container orchestration in modern cloud-native architectures, mostly due to its flexibility and scalability. However, one area that’s often overlooked when it comes to K8s is networking. Specifically, the hidden costs associated with Kubernetes networking can significantly impact your cloud bill and operational efficiency.

This post explores the common sources of hidden network costs in Kubernetes clusters and offers guidance on how to identify and reduce them.

Why Network Costs Stay Under the Radar

In Kubernetes, networking is abstracted away from most day-to-day workflows. Developers and platform teams focus on deploying services, not on where packets flow or how they’re billed. That abstraction lets engineers concentrate on their own work, but it also hides cost-driving behaviors. Whether it’s cross-zone traffic, excessive reliance on NAT gateways, or overlooked logging pipelines, these issues rarely surface until the bill arrives.

To bring these hidden costs to light, we’ve broken down some of the most common (and costly) Kubernetes networking patterns—along with tips to reduce their financial impact.

1. Cross-Zone Traffic

Cloud providers typically charge for data transferred between availability zones (AZs). By default, a Kubernetes Service spreads requests across all ready endpoints regardless of zone, and the scheduler does not co-locate pods that talk to each other. As a result, pods often communicate across zones even when traffic could have stayed local.

Cost Impact: High volumes of east-west traffic between pods in different AZs can quickly accumulate significant inter-AZ transfer costs.

How to Mitigate:

- Use topologySpreadConstraints to spread replicas evenly across zones, so that every zone has local endpoints available to serve its traffic.

- Design your services to prefer same-zone communication when possible, for example with Topology Aware Routing.

- Monitor traffic flow with tools like VPC Flow Logs or Cilium Hubble.
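To make the monitoring step concrete, here is a minimal Python sketch that totals traffic per zone pair and estimates what the cross-zone share might cost. The record keys (`src_zone`, `dst_zone`, `bytes`) and the default per-GB rate are assumptions for illustration only. Real flow logs need to be enriched with zone information first, and actual rates vary by provider and region.

```python
from collections import defaultdict

# Assumed placeholder rate in USD per GB; check your provider's price list.
ASSUMED_INTER_ZONE_USD_PER_GB = 0.01


def cross_zone_summary(flows, usd_per_gb=ASSUMED_INTER_ZONE_USD_PER_GB):
    """Sum bytes per (src_zone, dst_zone) pair and estimate cross-zone cost.

    `flows` is an iterable of dicts with the hypothetical keys
    'src_zone', 'dst_zone', and 'bytes'.
    """
    bytes_by_pair = defaultdict(int)
    for flow in flows:
        bytes_by_pair[(flow["src_zone"], flow["dst_zone"])] += flow["bytes"]

    total_bytes = sum(bytes_by_pair.values())
    # Only pairs whose source and destination zones differ are billable here.
    cross_zone_bytes = sum(
        total for (src, dst), total in bytes_by_pair.items() if src != dst
    )
    return {
        "bytes_by_pair": dict(bytes_by_pair),
        "cross_zone_bytes": cross_zone_bytes,
        "cross_zone_share": cross_zone_bytes / total_bytes if total_bytes else 0.0,
        "estimated_usd": cross_zone_bytes / 1e9 * usd_per_gb,
    }


if __name__ == "__main__":
    sample = [
        {"src_zone": "zone-a", "dst_zone": "zone-a", "bytes": 3_000_000_000},
        {"src_zone": "zone-a", "dst_zone": "zone-b", "bytes": 1_000_000_000},
    ]
    print(cross_zone_summary(sample))
```

A rising `cross_zone_share` for a particular service pair is usually the signal worth acting on, more so than the absolute dollar estimate.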

2. Ingress/Egress via NAT Gateway or Internet Gateway

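When workloads reach the internet or other VPCs through a NAT Gateway or Internet Gateway, that traffic is metered. On AWS, a NAT Gateway charges for every gigabyte it processes in either direction on top of an hourly fee, and data leaving through an Internet Gateway is billed at data transfer out rates. In Kubernetes, services that call external APIs, download container images, or connect to third-party services can unknowingly incur these charges.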

Cost Impact: NAT Gateway costs can be substantial, especially at high scale or with chatty applications.

How to Mitigate:

- Use VPC endpoints for AWS services to keep traffic within the AWS network.

- Cache commonly accessed data or APIs.

- Place critical services in private subnets with direct peering to reduce reliance on gateways.

- Use ECR pull-through cache rules to cache container images from upstream registries.
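A rough way to size the opportunity is to model the NAT Gateway bill as an hourly charge plus a per-GB processing charge, then ask how much of the processed volume could bypass the gateway. The sketch below assumes that billing model. The function names are hypothetical and the rates are inputs you supply from your own price list. It also ignores any charges the alternative path carries, such as per-hour fees on some endpoint types.

```python
def nat_gateway_monthly_usd(gb_processed, hourly_usd, per_gb_usd, hours=730):
    """Estimate one gateway's monthly cost: fixed hourly fee plus data processing."""
    return hours * hourly_usd + gb_processed * per_gb_usd


def savings_from_bypass(gb_processed, bypass_fraction, per_gb_usd):
    """Processing charge avoided if a share of traffic no longer crosses the gateway.

    `bypass_fraction` is the assumed share (0.0 to 1.0) of traffic that could
    move to VPC endpoints, caches, or peering instead.
    """
    if not 0.0 <= bypass_fraction <= 1.0:
        raise ValueError("bypass_fraction must be between 0.0 and 1.0")
    return gb_processed * bypass_fraction * per_gb_usd


if __name__ == "__main__":
    # Illustrative inputs only, not quoted prices.
    rate_hourly, rate_per_gb = 0.045, 0.045
    print(nat_gateway_monthly_usd(10_000, rate_hourly, rate_per_gb))
    print(savings_from_bypass(10_000, 0.6, rate_per_gb))
```

Running this per gateway, with the bypass fraction taken from flow logs rather than guessed, gives a defensible number to weigh against the effort of adding endpoints or caches.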

3. Service Mesh Overhead

Service meshes like Istio or Linkerd introduce sidecar proxies (e.g., Envoy) that handle traffic routing, observability, and security. While valuable, they add a proxy hop on each side of every request, along with encryption, telemetry, and control-plane traffic of their own.

Cost Impact: Every inbound and outbound request flows through the sidecar proxy, increasing internal data transfer.

How to Mitigate:

- Use mesh selectively. Consider namespace-level injection instead of mesh-wide auto-injection.

- Measure actual traffic volume and cost impact before adopting mesh cluster-wide.

- For latency-sensitive or low-throughput services, use native Kubernetes services or lightweight alternatives like Consul without sidecars.
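As one way to picture namespace-level injection, the Python sketch below generates Namespace manifests in which only an explicit allow-list carries Istio's `istio-injection: enabled` label. It assumes Istio with its default label-based injection. The namespace names and the `namespace_manifest` helper are hypothetical, and the output is JSON, which `kubectl apply -f` accepts alongside YAML.

```python
import json


def namespace_manifest(name, mesh_enabled):
    """Build a Namespace manifest, opting into sidecar injection only on request."""
    # 'istio-injection: enabled' is the label Istio's injector looks for;
    # namespaces without it are left out of the mesh in a default install.
    labels = {"istio-injection": "enabled"} if mesh_enabled else {}
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": name, "labels": labels},
    }


if __name__ == "__main__":
    # Hypothetical namespaces: only those in the allow-list join the mesh.
    meshed = {"payments"}
    for ns in ["payments", "batch-jobs", "internal-tools"]:
        print(json.dumps(namespace_manifest(ns, ns in meshed), indent=2))
```

Keeping the allow-list in version control makes each addition to the mesh a reviewed decision rather than a default.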

4. Logging and Monitoring Traffic

Tools like Fluentd, Prometheus, and OpenTelemetry often send high volumes of metrics and logs across the network to central collectors.

Cost Impact: Constant data export from nodes to aggregation services or external observability platforms can rack up VPC egress charges.

How to Mitigate:

- Aggregate and filter logs at the node level.

- Tune scrape intervals and retention settings.

- Store logs and metrics locally when possible.
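The effect of tuning scrape intervals is easy to estimate with simple arithmetic: samples per day scale inversely with the interval. The sketch below is a back-of-the-envelope model, not a measurement. `bytes_per_sample` is an assumed average that depends heavily on the wire format and compression in use, and both function names are hypothetical.

```python
SECONDS_PER_DAY = 86_400


def daily_samples(active_series, scrape_interval_s):
    """Number of samples collected per day for a given scrape interval."""
    if scrape_interval_s <= 0:
        raise ValueError("scrape_interval_s must be positive")
    return active_series * SECONDS_PER_DAY / scrape_interval_s


def daily_export_gb(active_series, scrape_interval_s, bytes_per_sample):
    """Approximate GB shipped per day, given an assumed average sample size."""
    return daily_samples(active_series, scrape_interval_s) * bytes_per_sample / 1e9


if __name__ == "__main__":
    # Illustrative comparison: the same series count at two intervals.
    for interval in (15, 60):
        print(interval, daily_export_gb(100_000, interval, bytes_per_sample=2))
```

Moving from a 15-second to a 60-second interval cuts the sample count to a quarter, so the interval is worth revisiting for any metric that nobody alerts on at fine granularity.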

5. Misconfigured Load Balancers

Using external load balancers (such as an internet-facing AWS Classic Load Balancer or NLB) for internal traffic can result in unnecessary data processing and transfer charges. This often happens when developers default to creating LoadBalancer services without evaluating traffic patterns.

Cost Impact: Internal-to-internal traffic might loop out of the cluster through a load balancer.

How to Mitigate:

- Use ClusterIP for internal services.

- Use internal load balancers where external access isn’t required.

- Consolidate external entry points behind a shared ingress controller instead of provisioning a separate load balancer for each service.
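A quick audit can surface Services that might be better served by ClusterIP or an internal load balancer. The Python sketch below assumes you have parsed the `items` list from `kubectl get services --all-namespaces -o json`. The annotation keys it checks are AWS-specific and are an assumption about your environment, since other providers use different keys. The helper names are hypothetical.

```python
# AWS annotations that mark a LoadBalancer Service as internal.
# Other cloud providers use different keys; extend this map as needed.
INTERNAL_ANNOTATIONS = {
    "service.beta.kubernetes.io/aws-load-balancer-scheme": "internal",
    "service.beta.kubernetes.io/aws-load-balancer-internal": "true",
}


def is_internal(service):
    """Return True if the Service carries a known 'internal' annotation."""
    annotations = service.get("metadata", {}).get("annotations") or {}
    return any(annotations.get(k) == v for k, v in INTERNAL_ANNOTATIONS.items())


def external_load_balancers(services):
    """List 'namespace/name' for LoadBalancer Services not marked internal."""
    flagged = []
    for svc in services:
        if svc.get("spec", {}).get("type") == "LoadBalancer" and not is_internal(svc):
            meta = svc["metadata"]
            flagged.append(f"{meta.get('namespace', 'default')}/{meta['name']}")
    return flagged


if __name__ == "__main__":
    sample = [
        {"metadata": {"name": "web", "namespace": "shop"},
         "spec": {"type": "LoadBalancer"}},
        {"metadata": {"name": "cache", "namespace": "shop"},
         "spec": {"type": "ClusterIP"}},
    ]
    print(external_load_balancers(sample))
```

Each flagged Service is a candidate for review, not an automatic fix: some genuinely need to be reachable from outside the cluster.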

6. Node Affinity and Its Indirect Costs

Node Affinity is used to influence pod placement on specific nodes, but it can have unexpected cost implications if misused.

Cost Impact:

- Can lead to poor bin-packing and underutilized nodes → more nodes than needed.

- May prevent the cluster autoscaler from scaling down underutilized nodes, because their pods cannot be rescheduled elsewhere.

- Might force pods to avoid cheaper or spot instances.

- Increases cross-AZ traffic if not AZ-aware → more inter-AZ transfer charges.

How to Mitigate:

- Align affinity rules with cost optimization goals (e.g., prefer spot pools).

- Use soft (preferred) affinity instead of hard (required) when flexibility is acceptable.

- Revisit affinity settings during rightsizing or autoscaling reviews.
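To illustrate the soft-affinity option, the sketch below builds the `affinity` stanza of a pod spec using `preferredDuringSchedulingIgnoredDuringExecution`, which asks the scheduler to favor matching nodes without refusing to schedule elsewhere. The label key `example.com/capacity-type` and the value `spot` are hypothetical stand-ins for whatever labels your node pools actually carry, and `preferred_node_affinity` is an illustrative helper, not part of any library.

```python
import json


def preferred_node_affinity(label_key, label_values, weight=50):
    """Build a soft node-affinity stanza for a pod spec.

    Kubernetes accepts weights from 1 to 100; a higher weight makes the
    preference count for more when the scheduler scores candidate nodes.
    """
    if not 1 <= weight <= 100:
        raise ValueError("weight must be between 1 and 100")
    return {
        "nodeAffinity": {
            "preferredDuringSchedulingIgnoredDuringExecution": [
                {
                    "weight": weight,
                    "preference": {
                        "matchExpressions": [
                            {
                                "key": label_key,
                                "operator": "In",
                                "values": list(label_values),
                            }
                        ]
                    },
                }
            ]
        }
    }


if __name__ == "__main__":
    # Hypothetical label: prefer spot capacity, but fall back to any node.
    affinity = preferred_node_affinity("example.com/capacity-type", ["spot"], weight=80)
    print(json.dumps({"affinity": affinity}, indent=2))
```

Because the rule is only a preference, pods still schedule when no spot capacity is available, which avoids the stuck-pod and scale-down problems that hard rules can cause.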

Bringing Network Costs into the Spotlight

Understanding and managing Kubernetes networking costs is essential to running efficient and cost-effective clusters. By being aware of these hidden cost drivers and implementing best practices, platform and DevOps teams can avoid unnecessary cloud expenses while maintaining high performance.

Start by auditing your current traffic flows, tagging cost centers, and using observability tools that give you visibility into where your network spend is going. With the right strategies in place, you can keep your Kubernetes clusters performant and budget-friendly.